March 30th, 2024

Unlocking the Secrets of CHAID

By Rahul Sonwalkar · 6 min read

Overview

In the vast realm of statistical analysis, various techniques allow researchers to delve deep into data, uncovering patterns and relationships. One such powerful tool is the Chi-square Automatic Interaction Detector, commonly known as CHAID. Developed by Gordon V. Kass in 1980, CHAID has become a go-to method for many analysts aiming to understand intricate relationships between variables. In this article, we'll embark on a journey to understand the nuances of CHAID and its significance in modern research.

What is CHAID?

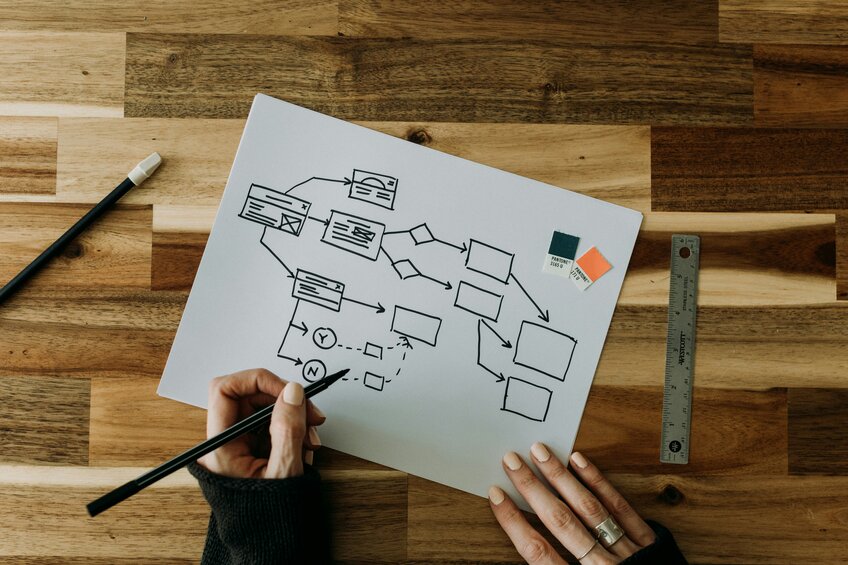

CHAID is a statistical technique for discovering relationships between a dependent (outcome) variable and a set of independent (predictor) variables. It builds a predictive model, usually drawn as a tree, by repeatedly splitting the data on the predictor most significantly associated with the outcome and merging predictor categories that do not differ significantly from each other. CHAID can handle nominal, ordinal, and continuous data (continuous predictors are first grouped into ordered categories), which makes it a versatile approach to data analysis.
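CHAID's significance tests compare categories, so a continuous predictor is typically grouped into ordered bins before the tree is grown. The sketch below assumes equal-frequency binning, where each bin holds roughly the same number of observations. The helper name `bin_continuous` and the example ages are hypothetical, and real implementations may choose cut points differently.

```python
from bisect import bisect_right
from statistics import quantiles


def bin_continuous(values, n_bins=10):
    """Map each continuous value to an ordinal bin label 0..n_bins-1.

    Hypothetical helper: the cut points are equal-frequency quantiles of
    the data, so each bin holds roughly the same number of observations.
    """
    cuts = quantiles(values, n=n_bins)  # n_bins - 1 cut points
    # bisect_right counts the cut points at or below v, which is exactly
    # the index of the bin that v falls into.
    return [bisect_right(cuts, v) for v in values]


# Hypothetical example: customer ages 18..77 grouped into four ordered bins.
ages = list(range(18, 78))
labels = bin_continuous(ages, n_bins=4)
print([labels.count(k) for k in range(4)])  # [15, 15, 15, 15]
```

The resulting labels are ordinal, so they can be treated like any other ordered predictor when CHAID decides which bins to merge.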

How Does CHAID Work?

The Building Blocks of CHAID Analysis

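1. Dependent Variable: The target variable the analysis sets out to explain. For example, a bank might want to understand what drives a customer's credit risk, recorded as high, medium, or low.

2. Parent Node: A node that CHAID splits further. The first parent, the root node, contains the entire sample; CHAID divides it into two or more groups using the predictor most significantly associated with the dependent variable. In our bank example, the root node holds every customer, along with the share of customers at high, medium, and low risk.

3. Child Node: The nodes produced when a parent node is split, one for each (possibly merged) category of the chosen independent variable. A child node becomes a parent in turn if it is split again.

4. Terminal Node: A node that is not split any further, either because no remaining predictor is significantly associated with the dependent variable or because a stopping rule (such as a minimum node size or a maximum tree depth) has been reached. Terminal nodes are the final segments of the tree, while the most influential predictors tend to appear near the top, close to the root.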
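The node vocabulary above maps naturally onto a small tree data structure. The following Python sketch is illustrative only: the `Node` class, the `terminal_nodes` helper, and the bank figures are hypothetical, and a real CHAID implementation would also record the test statistic and p-value behind each split.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    """One node of a CHAID-style tree (hypothetical structure for illustration)."""
    class_counts: Dict[str, int]      # distribution of the dependent variable here
    split_on: Optional[str] = None    # predictor used to split this node, if any
    # Maps each (possibly merged) group of predictor categories to its child node.
    children: Dict[Tuple[str, ...], "Node"] = field(default_factory=dict)

    @property
    def is_terminal(self) -> bool:
        # A terminal node is one that was never split.
        return not self.children


def terminal_nodes(node: Node) -> List[Node]:
    """Collect the terminal nodes below `node`, left to right."""
    if node.is_terminal:
        return [node]
    leaves: List[Node] = []
    for child in node.children.values():
        leaves.extend(terminal_nodes(child))
    return leaves


# Hypothetical bank tree: the root (a parent) is split on income band, and the
# lowest-income child becomes a parent in turn when it is split on age band.
young = Node({"low": 30, "medium": 50, "high": 70})
older = Node({"low": 70, "medium": 70, "high": 60})
low_income = Node({"low": 100, "medium": 120, "high": 130}, split_on="age band",
                  children={("18-30",): young, ("31-50", "51+"): older})
other_income = Node({"low": 400, "medium": 180, "high": 70})
root = Node({"low": 500, "medium": 300, "high": 200}, split_on="income band",
            children={("under 30k",): low_income,
                      ("30k-60k", "over 60k"): other_income})

print(len(terminal_nodes(root)))  # 3
```

The tuple keys let one child stand for several merged predictor categories, which is how CHAID's merging step shows up in the finished tree.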

Merging in CHAID Analysis

The statistical test CHAID uses to compare predictor categories depends on the type of dependent variable:

- For continuous dependent variables: the F-test.

- For categorical dependent variables: the chi-square test.
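For a categorical dependent variable, the chi-square test is the familiar test of independence on a cross-tabulation. For a continuous dependent variable, the F-test is a one-way analysis of variance that compares the mean of the dependent variable across predictor categories. The sketch below computes that F-test p-value with the Python standard library only; the function names are hypothetical, and the incomplete-beta helper uses the standard continued-fraction evaluation rather than code from any CHAID package.

```python
import math


def _beta_cf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the regularized incomplete beta (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even and odd terms of the continued fraction for this m.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def betainc_reg(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    bt = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                  + a * math.log(x) + b * math.log(1.0 - x))
    if x < (a + 1.0) / (a + b + 2.0):
        return bt * _beta_cf(a, b, x) / a
    return 1.0 - bt * _beta_cf(b, a, 1.0 - x) / b


def f_sf(f, d1, d2):
    """P(F >= f) for an F distribution with (d1, d2) degrees of freedom."""
    return betainc_reg(d2 / 2.0, d1 / 2.0, d2 / (d2 + d1 * f))


def anova_p_value(groups):
    """One-way ANOVA F-test p-value; each non-empty group holds one category's outcomes."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    d1, d2 = k - 1, n - k
    if d1 < 1 or d2 < 1:
        raise ValueError("need 2+ categories and more observations than categories")
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    if ss_within == 0:
        # Degenerate case: no spread inside any category.
        return 0.0 if ss_between > 0 else 1.0
    f = (ss_between / d1) / (ss_within / d2)
    return f_sf(f, d1, d2)


# Hypothetical example: a continuous outcome observed in three predictor categories.
print(round(anova_p_value([[1, 2, 3], [4, 5, 6], [7, 8, 9]]), 6))  # 0.001
```

In a CHAID-style merge step, the same function would be applied to pairs of categories, with the least significant pair being the first candidate for merging.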

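Within each predictor, CHAID looks for the pair of categories whose difference on the dependent variable is least significant (for an ordinal predictor, only adjacent categories are compared). If that pair's p-value is above the merging threshold, the two categories are combined and the search repeats. Once no further pair can be merged, CHAID computes a Bonferroni-adjusted p-value for the predictor's merged cross-tabulation, correcting for the many ways its categories could have been grouped. The predictor with the smallest adjusted p-value is used to split the node, provided that p-value is below the splitting threshold.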
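The merging loop described above can be sketched in plain Python for a nominal predictor and a categorical dependent variable. This is a simplified sketch, not Kass's full algorithm or any package's implementation: the function names and the `alpha_merge` threshold of 0.05 are assumptions, any pair of categories may merge (an ordinal predictor would allow only adjacent pairs and use a different multiplier), and the step that re-checks whether a merged group of three or more categories should be split again is omitted. The Bonferroni multiplier counts the ways to group c original categories into r merged ones, the correction Kass proposed for nominal predictors.

```python
import math
from itertools import combinations


def chi2_sf(x, df):
    """P(X >= x) for a chi-square variable with integer df >= 1."""
    y = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-y)             # Q(1, y) = exp(-y)
    else:
        a, q = 0.5, math.erfc(math.sqrt(y))  # Q(1/2, y) = erfc(sqrt(y))
    # Step the regularized upper incomplete gamma Q(a, y) up to a = df / 2
    # using Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1).
    while a < df / 2.0:
        if y > 0:
            q += math.exp(a * math.log(y) - y - math.lgamma(a + 1))
        a += 1.0
    return min(q, 1.0)


def chi2_p_value(table):
    """Pearson chi-square test of independence on a table of counts."""
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    n = sum(row_tot)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_tot[i] * col_tot[j] / n
            if expected > 0:
                stat += (observed - expected) ** 2 / expected
    # Empty rows or columns contribute no degrees of freedom.
    df = (sum(t > 0 for t in row_tot) - 1) * (sum(t > 0 for t in col_tot) - 1)
    return chi2_sf(stat, df) if df > 0 else 1.0


def merge_categories(counts, alpha_merge=0.05):
    """Merge a nominal predictor's categories, CHAID-style.

    `counts` maps each category to its counts per dependent-variable class.
    While the least significantly different pair has p > alpha_merge, merge it.
    """
    groups = {(cat,): list(row) for cat, row in counts.items()}
    while len(groups) > 1:
        (g1, g2), p = max(
            (((a, b), chi2_p_value([groups[a], groups[b]]))
             for a, b in combinations(groups, 2)),
            key=lambda item: item[1],
        )
        if p <= alpha_merge:
            break
        merged = [x + y for x, y in zip(groups.pop(g1), groups.pop(g2))]
        groups[g1 + g2] = merged
    return groups


def bonferroni_multiplier(c, r):
    """Ways to group c nominal categories into r non-empty groups (Stirling number)."""
    return sum((-1) ** i * math.comb(r, i) * (r - i) ** c
               for i in range(r)) // math.factorial(r)


def adjusted_p_value(counts, groups):
    """Bonferroni-adjusted p-value of the merged cross-tabulation."""
    p = chi2_p_value(list(groups.values()))
    return min(1.0, bonferroni_multiplier(len(counts), len(groups)) * p)


# Hypothetical bank data: region -> customers at [low, medium, high] risk.
counts = {"north": [40, 30, 30], "south": [40, 30, 30], "east": [10, 20, 70]}
groups = merge_categories(counts)
print(list(groups))  # [('east',), ('north', 'south')]
print(adjusted_p_value(counts, groups) < 0.05)  # True
```

Here "north" and "south" have identical risk profiles, so their pairwise p-value is 1 and they merge, while "east" differs sharply and stays separate. In a full tree, the adjusted p-values of all predictors would then be compared to pick the split.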

Why Choose CHAID?

One of CHAID's main advantages over techniques such as regression analysis is flexibility: CHAID does not require the data to be normally distributed, so it can be applied to a wider range of datasets.

Conclusion

CHAID stands as a testament to the evolution of statistical methods, offering a unique lens through which to view and understand data. Whether you're a seasoned data scientist or a budding researcher, CHAID provides a robust framework for decoding complex relationships within datasets. As the volume of data keeps growing, tools like CHAID are likely to remain valuable for turning raw data into actionable insights.

Having journeyed through the complexities of CHAID, the importance of a robust analytical tool becomes clear. While the theory is essential, practical application is where the real magic happens. With Julius.ai, you can seamlessly apply CHAID techniques, ensuring precision and clarity in your results. Transition from understanding to application effortlessly with Julius.ai at your side.